“An expert knows all the answers if you ask the right questions.” - Claude Lévi-Strauss

That idea hits home in today’s AI-driven world. Prompt engineering is all about asking the right questions. It’s part art, part science, and it focuses on crafting inputs that help large language models, such as GPT-4, Gemini, and Claude, do their best work.

It sounds technical, but at its core, it’s something much more familiar.

Prompt engineering is really about clear communication: knowing how to guide the AI by giving it the right information. When that clicks, AI turns into a powerful tool. It can help with writing, teaching, solving problems, and making smarter decisions.

This shift puts the user in the driver’s seat. Instead of adapting to the tool, we shape how the tool responds to us. That change matters for user experience because prompt engineering makes AI more responsive, more helpful, and more focused on human needs.

So, What’s Going Wrong with Our Prompts?

Even though we’re the ones steering the conversation, our prompts don’t always land the way we expect. Sometimes the AI gives off-target answers, misses the point, or responds in a way that just feels... off. So what’s getting in the way?

Here are ten common reasons our prompts fall short:

1. Lack of Specificity
Vague prompts like "Write about marketing" can yield broad or irrelevant responses. Being specific helps the AI understand your exact needs.

2. Overloading with Information
Cramming too much into a single prompt can confuse the AI. Breaking complex tasks into smaller, manageable prompts often leads to better results.

3. Missing Context
Without sufficient background information, the AI may not grasp the nuances of your request, leading to generic or off-target outputs.

4. Ambiguous Language
Using unclear or double-meaning words can cause the AI to misinterpret your intent. Clear and precise language is key.

5. No Defined Goal
Prompts without a clear objective can result in unfocused responses. Clearly stating your desired outcome guides the AI effectively.

6. Ignoring the AI's Capabilities
Expecting the AI to perform tasks beyond its training, like predicting future events, can lead to inaccurate or fabricated responses.

7. Neglecting Audience and Purpose
Failing to tailor prompts to a specific audience or purpose can make the AI's response less relevant or effective.

8. Lack of Examples
Providing examples within your prompt can help the AI understand the desired format or style, leading to more accurate or desirable outputs.

9. Not Iterating
Assuming the first prompt will yield the perfect response is a common mistake. Iteratively refining your prompts can significantly improve results.

10. Overcomplicating Prompts
Using overly complex or technical language can confuse the AI. Keeping prompts straightforward and clear enhances understanding.

Types of Prompts

Before we get into which techniques work best, it’s useful to understand the range of approaches people commonly use when prompting AI. Not all are equally effective in every situation, but seeing the variety helps show how flexible prompting can be.

Direct Instruction Prompts are clear and to the point. They tell the AI exactly what to do. These are a step up from what's often called vanilla prompts, which are the most basic kind. Vanilla prompts are usually short, with little to no structure or guidance.

Example 1: Write a usability test script for a mobile banking app targeting older adults above 65.

Example 2: List the top five accessibility issues commonly found in e-commerce websites.

Contextual Prompts give the AI some background so it can better understand what you’re after.

Example 1: The user is trying to complete a checkout on a slow internet connection. Suggest UX improvements that would make the process easier.

Example 2: Imagine you’re analyzing feedback from a travel app used mostly by solo travellers. Summarize the key pain points.

Role-Based Prompts assign the AI a role so it answers from a certain perspective.

Example 1: You are a UX researcher preparing for stakeholder interviews. Draft five open-ended questions to understand product vision.

Example 2: You are a UX designer at a fintech company. Describe how you would redesign the onboarding flow for a budgeting app.
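In many chat-based tools, a role like this goes in a separate "system" message instead of being mixed into the request itself. The sketch below assumes the common list-of-messages shape (`{"role": ..., "content": ...}`) that several chat APIs accept. It only builds the messages and does not call any particular service. The helper name `role_based_messages` is hypothetical.

```python
# A minimal sketch: a role-based prompt expressed as chat-style messages.
# The {"role": ..., "content": ...} shape is an assumption modelled on common
# chat APIs; sending the messages to a model is deliberately left out.


def role_based_messages(role: str, task: str) -> list[dict[str, str]]:
    """Put the assigned role in a system message and the task in a user message."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]


messages = role_based_messages(
    role="a UX designer at a fintech company",
    task="Describe how you would redesign the onboarding flow for a budgeting app.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role separate from the task makes it easy to reuse one role across several requests, or to try a different role on the same request.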

Chain-of-Thought Prompts guide the AI to work through a problem step by step, which helps with complex, multi-stage tasks.

Example 1: Explain how to conduct a usability study for a new mobile feature, outlining each stage from planning to reporting.

Example 2: Describe the process of creating a persona based on survey data, from data collection to synthesis.

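Few-Shot and Zero-Shot Prompts differ in how much guidance they give. A few-shot prompt includes a handful of worked examples for the AI to follow. A zero-shot prompt includes none and relies on the instruction alone.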

Example 1: (Few-shot) UX feedback: “The app crashes on login” → Issue: Stability. UX feedback: “Text is hard to read in dark mode” → Issue: Accessibility. UX feedback: “Too many steps to book an appointment” → Issue: Usability.

Now classify this feedback: “Users can’t tell if their payment went through.”

Example 2: (Zero-shot) Generate a usability checklist for reviewing a government services website.
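The following Python sketch makes the few-shot versus zero-shot difference concrete. It assembles both versions of the feedback-classification prompt as plain text. The instruction wording, the `->` separator, and the function name `build_classification_prompt` are illustrative assumptions, not a required format.

```python
from collections.abc import Sequence

# Hypothetical instruction wording; adapt it to your own task.
INSTRUCTION = "Classify the UX feedback by its main issue type."


def build_classification_prompt(
    new_feedback: str,
    examples: Sequence[tuple[str, str]] = (),
) -> str:
    """Build a few-shot prompt when examples are given, a zero-shot one otherwise."""
    lines = [INSTRUCTION]
    for feedback, issue in examples:
        # Each worked example pairs a piece of feedback with its label.
        lines.append(f'UX feedback: "{feedback}" -> Issue: {issue}')
    # The new item ends with an empty label for the model to fill in.
    lines.append(f'UX feedback: "{new_feedback}" -> Issue:')
    return "\n".join(lines)


labelled = [
    ("The app crashes on login", "Stability"),
    ("Text is hard to read in dark mode", "Accessibility"),
    ("Too many steps to book an appointment", "Usability"),
]
new_item = "Users can't tell if their payment went through."

few_shot = build_classification_prompt(new_item, labelled)
zero_shot = build_classification_prompt(new_item)
print(few_shot)
print()
print(zero_shot)
```

The only difference between the two prompts is whether the labelled examples are included. This makes it easy to test whether the examples actually improve the answers for your task.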

“Prompt Therapy” is the fix we need. Let’s get to it.

Now that we have a clearer view of why prompts fail and the different types of techniques people commonly use, it’s time to talk about how to actually make them work better. That’s where prompt therapy comes in.

In practice, the best prompts are often a creative mix. You might combine context with a defined goal, assign a role to sharpen focus, or add examples and a step-by-step format to guide the output. Knowing the categories helps, but knowing how to blend them is what makes the difference.

To improve a prompt that isn't working, try these seven steps next time:

1. Clarify your goal
Be clear about what you’re asking for. If the AI can’t tell what you want, it’ll guess, and not always well.

2. Simplify the task
If your prompt is trying to do too much, break it into smaller parts.

3. Add relevant context
Help the AI understand the situation. Give it the background it needs without overloading it.

4. Use a helpful role
If the task would benefit from a certain point of view, say so. A role can shape tone and focus.

5. Provide examples when useful
Show what a good response looks like. This is especially helpful for formatting or tone.

6. Encourage step-by-step reasoning
If the task is complex, ask the AI to walk through it in stages.

7. Refine and iterate
Don’t expect the first version to be perfect. Adjust one thing at a time and build on what works.
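One way to keep these steps in mind is to treat a prompt as a set of named parts and include only the ones a task needs. The sketch below uses a hypothetical `PromptSpec` container; its field names and joining format are our own choices, not a standard. It covers the goal, context, role, examples, and step-by-step parts. Simplifying the task corresponds to writing one spec per subtask.

```python
from dataclasses import dataclass, field, replace


@dataclass
class PromptSpec:
    """Hypothetical container for prompt parts; field names are illustrative."""

    goal: str                                          # Clarify your goal
    context: str = ""                                  # Add relevant context
    role: str = ""                                     # Use a helpful role
    examples: list[str] = field(default_factory=list)  # Provide examples when useful
    step_by_step: bool = False                         # Encourage step-by-step reasoning

    def render(self) -> str:
        """Join only the parts that are set, separated by blank lines."""
        parts = []
        if self.role:
            parts.append(f"You are {self.role}.")
        if self.context:
            parts.append(f"Context: {self.context}")
        parts.append(f"Task: {self.goal}")
        if self.examples:
            bullets = "\n".join(f"- {example}" for example in self.examples)
            parts.append(f"Examples of a good response:\n{bullets}")
        if self.step_by_step:
            parts.append("Work through the task in stages and label each stage.")
        return "\n\n".join(parts)


# Refine and iterate: each revision changes exactly one field.
v1 = PromptSpec(goal="Suggest three UX improvements for the checkout flow.")
v2 = replace(v1, context="Users are completing checkout on a slow internet connection.")
v3 = replace(v2, role="a UX designer for an e-commerce site")
print(v3.render())
```

`dataclasses.replace` returns a new copy with one field changed and leaves the earlier versions untouched. This mirrors the advice to adjust one thing at a time, so you can compare versions side by side and keep what works.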

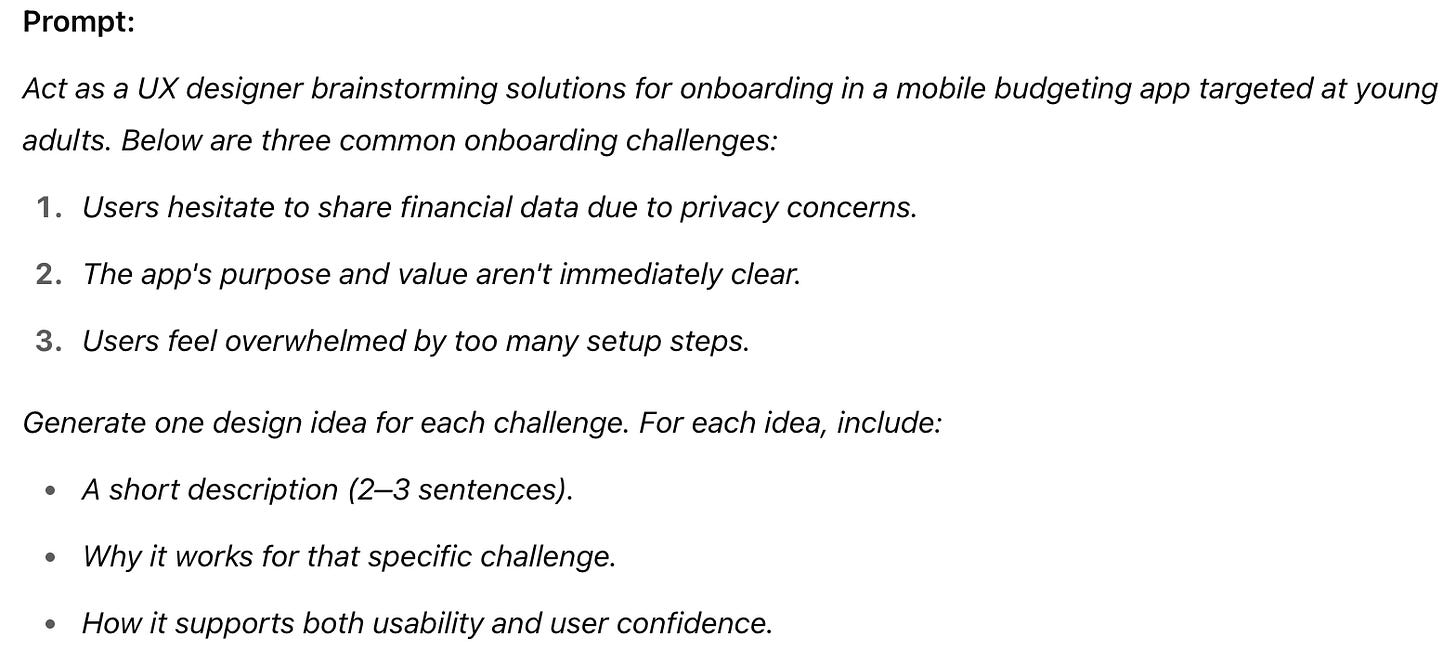

To put these ideas into action, let’s look at how well-crafted prompts work in practice. The two examples below show how to combine clarity, context, structure, and purpose without leading the AI or relying on guesswork.

Example 1:

This is a solid prompt because it clearly defines the task, sets a realistic UX scenario, and includes structured guidance without being overly prescriptive. It encourages relevant, thoughtful ideas tied to real onboarding challenges. That said, there’s always room to improve.

Example 2:

Again, this prompt is a strong example of purposeful prompt design in a UX context. It defines a realistic design scenario, sets clear boundaries (no access to user data), and provides structure for a focused and thoughtful response. By asking for three features that solve real commuter problems, it guides the AI toward practical, user-centred outputs.

The requested format (feature, user problem, and improvement) encourages clarity without limiting creativity. It shows how to structure a response without over-directing its content.

Let’s Wrap it Up

In this post, we explored why prompts often miss the mark, outlined common prompt types, and introduced a more intentional approach to prompting we’re calling prompt therapy. You saw how clarity, structure, and thoughtful combinations of techniques can lead to more useful, human-centred responses from AI.

Prompting well isn’t about following a fixed formula. It’s about understanding the task, the context, and the user, then shaping the prompt to fit.

If this topic speaks to you, we invite you to join us for our live AI Masterclass on Wednesday, June 11, 2025. We’ll cover real-world examples, share practical strategies, and answer your questions live.
