Everyday Ethics in AI Design: The IBM Framework

Slowing Down to Ask Better Questions About What We Create

Imagine you’re in a hotel room, speaking to a virtual assistant. It understands your preferences, adjusts the lighting, recommends local attractions. Seems convenient, even impressive. But pause for a moment: how did this assistant learn to speak your language? How does it decide what to recommend? And what happens to everything you say?

These questions aren’t exclusively technical. They’re also ethical. And today, we’re going to explore a powerful framework for thinking about them: IBM’s "Design for AI" framework.

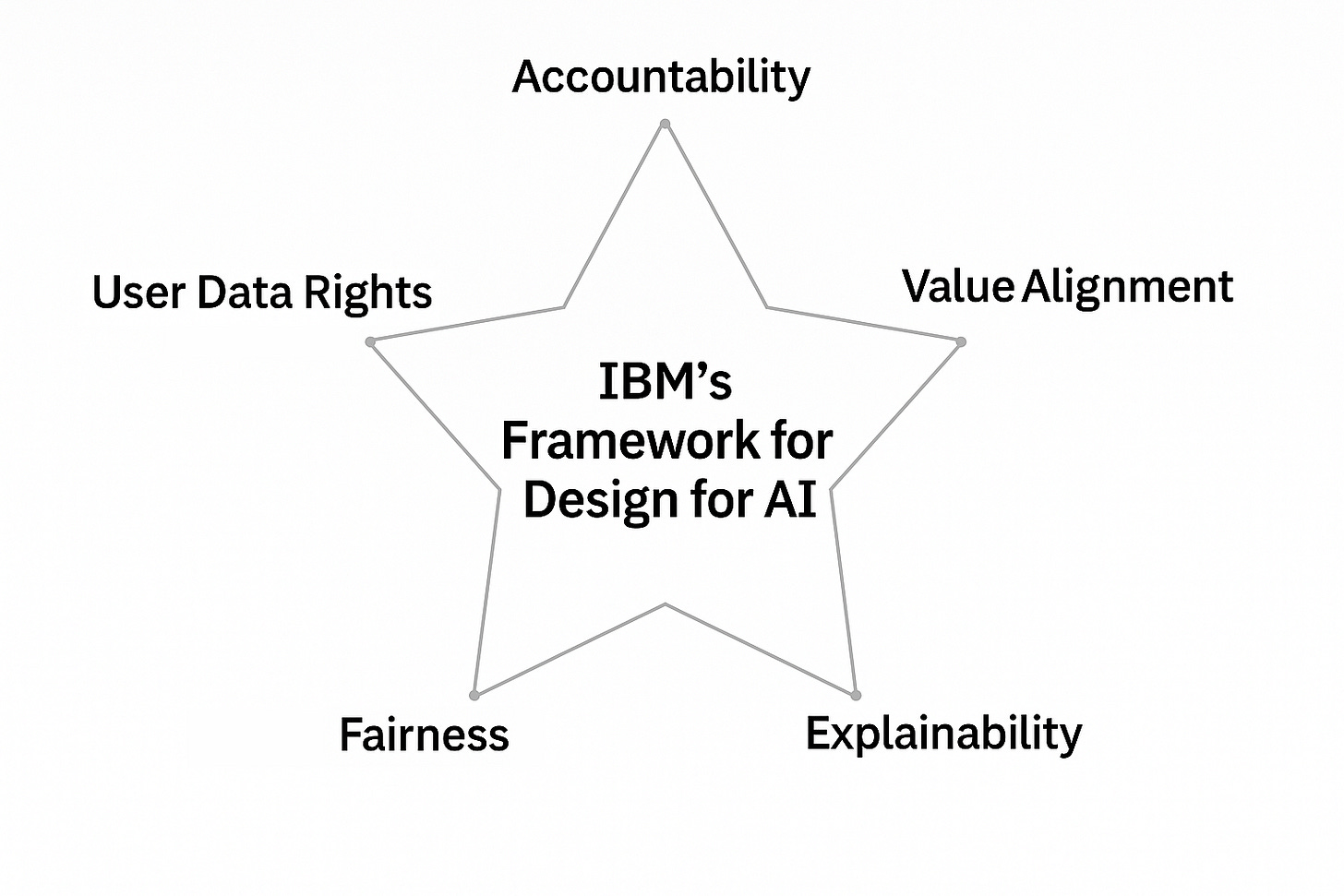

This framework is structured around five focal areas that every responsible AI project should consider: accountability, value alignment, explainability, fairness, and user data rights.

Now, if you’re a UX researcher or designer, you might be thinking: isn’t this more relevant to developers? Not quite. In fact, you play a crucial role in shaping these decisions.

You’re the ones who interface with users, interpret their behaviours, and translate their needs into the architecture of systems. You’re the ethical interpreters as much as you are design strategists.

So, as we go over the items in this framework, we want you to keep in mind that this isn’t just a checklist we’re going through. It’s a mindset. And it’s one that empowers you to build not just usable systems, but systems that are more human-centered.

1. Accountability: AI Doesn’t Build Itself

“Nearly 50% of the surveyed developers believe that the humans creating AI should be responsible for considering the ramifications of the technology. Not the bosses. Not the middle managers. The coders.”

Mark Wilson, Fast Company, on Stack Overflow’s Developer Survey Results 2018

Behind every AI system is a team of humans making choices. Some big and obvious, like what data to use or which algorithm to train. Others are small but equally powerful, like how a user interface nudges someone toward a decision.

And this means that accountability isn’t abstract. It’s distributed. It’s shared. It’s yours, mine, the team's, the company's. No one gets to opt out.

Too often, we hear people talk about AI as if it’s autonomous in a way that removes human responsibility. But that framing creates a dangerous distance between the outcome of a system and the humans who shaped it.

Here’s the truth: algorithms don’t decide what’s ethical. People do.

So what does real accountability look like?

It means starting every project by clarifying who is responsible for what across design, development, deployment, and beyond.

It means keeping track of key decisions, especially ethical ones, so they can be revisited and understood later.

It means being honest about where your system’s influence begins and ends. What can it actually control? Where might it have unintended effects?

And it means knowing the relevant guidelines, whether that’s company policy, data protection laws, or broader ethical standards like IEEE’s Ethically Aligned Design.

2. Value Alignment: Whose Values Are You Designing For?

“If machines engage in human communities as autonomous agents, then those agents will be expected to follow the community’s social and moral norms. A necessary step in enabling machines to do so is to identify these norms. But whose norms?”

— The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

That last line, “But whose norms?”, is a real challenge. Value alignment isn’t about reaching consensus. It’s about listening carefully, representing responsibly, and being transparent about the choices you’re making.

Every AI system operates with assumptions about what’s appropriate, useful, helpful, or fair. These assumptions don’t appear out of nowhere. They come from the teams building the system and the decisions they make along the way. Whether explicitly or implicitly, your system reflects a set of values.

But human values aren’t neatly packaged. They’re shaped by culture, history, context, and identity. The same feature that feels respectful to one person might feel invasive to another. A tone that seems polite in one country might come across as dismissive somewhere else.

So if you’re serious about building responsible AI, you need to invest early and intentionally in understanding whose values are shaping your system and who might be excluded.

Here’s how thoughtful teams approach this:

They collaborate with social scientists, ethicists, linguists, and community stakeholders.

They use field research to engage with users, not just to validate ideas, but to surface unspoken norms.

They acknowledge that values shift over time and build in processes to adapt accordingly.

And they use mapping tools like the Ethics Canvas to chart tensions and trade-offs explicitly.

3. Explainability: If People Don’t Understand It, They Won’t Trust It

“Companies must be able to explain what went into their algorithm’s recommendations. If they can’t, their systems shouldn’t be on the market.”

— Data Responsibility at IBM

Let’s be honest: most people don’t trust what they don’t understand.

And when it comes to AI, that understanding often breaks down quickly. Even for technically fluent users, it can be hard to tell how a system made a recommendation or why it chose one option over another. For everyday users, it can feel like guessing in the dark.

That’s where explainability comes in.

Explainability means that people interacting with your system can get a meaningful, honest, and understandable sense of what the system is doing, why it’s doing it, and based on what.

This doesn’t mean showing a wall of logs or technical graphs. It means designing in a way that helps users make sense of the system’s behavior, especially when the stakes are high.

For example, if an AI assistant suggests a restaurant or denies a request, users should be able to ask, “Why this one?” and get a clear answer.

Here’s what strong practice in explainability looks like:

Build in moments of transparency throughout the experience, not just in the fine print.

Give users a way to ask questions, challenge outputs, or request clarification.

Log system decisions in ways that are accessible to your team, and, where appropriate, to end users.

Tailor explanations to the audience. A guest in a hotel doesn’t need the same kind of detail as a system engineer or regulator.
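To make the last two practices concrete, here is a minimal Python sketch of logging a decision once and explaining it differently per audience. Everything in it is an illustrative assumption rather than part of IBM's framework: the `DecisionRecord` schema, the `explain` function, the audience labels, and the idea that your system can expose per-factor weights at all.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict


@dataclass
class DecisionRecord:
    """One logged recommendation and the factors behind it (hypothetical schema)."""
    decision: str
    factors: Dict[str, float]  # factor name -> relative weight (assumed to be available)
    model_version: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def explain(record: DecisionRecord, audience: str) -> str:
    """Return an explanation of the same decision, tailored to who is asking."""
    # Order factors from most to least influential.
    ranked = sorted(record.factors.items(), key=lambda kv: kv[1], reverse=True)
    if audience == "guest":
        # Everyday users get the top two reasons in plain language.
        reasons = " and ".join(name for name, _ in ranked[:2])
        return f"We suggested {record.decision} mainly because of {reasons}."
    # Engineers and auditors get the full record.
    detail = ", ".join(f"{name}={weight:.2f}" for name, weight in ranked)
    return (
        f"{record.decision} | model={record.model_version} "
        f"| at={record.timestamp} | factors: {detail}"
    )
```

The design choice worth noticing is that both explanations come from the same stored record, so the plain-language answer to “Why this one?” can never drift away from what the team sees internally.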

Explainability also supports accountability. If no one can explain what the system is doing (even internally), then no one can take responsibility for its outcomes. It becomes ungovernable.

And here’s another point: invisible systems aren’t neutral systems. Just because something is seamless doesn’t mean it’s harmless. In fact, hiding complexity can make harm more likely, not less.

4. Fairness: Who Gets Left Out, and Why?

“By progressing new ethical frameworks for AI and thinking critically about the quality of our datasets and how humans perceive and work with AI, we can accelerate the [AI] field in a way that will benefit everyone.”

— Bias in AI: How we Build Fair AI Systems and Less-Biased Humans

Let’s talk about something many teams don’t realize until it’s too late:

Bias doesn’t need your permission to enter the system.

It arrives quietly, through training data, system defaults, assumptions baked into design. And if no one’s watching, it stays. It spreads. And eventually, it shapes outcomes that are discriminatory, exclusionary, or just plain wrong.

Fairness in AI is about asking, from the start: Who might be harmed by this system? Who might be overlooked? And what can we do to prevent that?

This isn’t only about legal compliance, important as that is. It’s about equity. It means recognizing that the data we train on, the goals we optimize for, and the metrics we define are not neutral.

If your dataset underrepresents a group, your model might misclassify them.

If your recommendations are built from biased behavior, you’ll reinforce that bias.

If your design assumes “average” users, you risk building for no one in particular and excluding many in practice.

So how do we build with fairness in mind?

Audit your data sources. Understand who’s included, who’s missing, and why.

Diversify your team. Fairness starts with who’s in the room making the calls.

Design for correction. Build systems that can be challenged, updated, and improved based on real-world use and feedback.

Listen actively. Especially to edge cases, outliers, and people whose perspectives are least often heard.
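A data audit can start very small. The sketch below, in Python, shows two basic checks: how much of the dataset each group makes up, and how often the model is wrong for each group. The function names and the idea of a single group label per record are simplifying assumptions for illustration; real audits usually need richer, intersectional categories and a domain-appropriate fairness metric.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def representation(groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of the dataset that each group makes up."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def error_rate_by_group(
    groups: Sequence[Hashable],
    y_true: Sequence[int],
    y_pred: Sequence[int],
) -> Dict[Hashable, float]:
    """Fraction of wrong predictions within each group."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for group, truth, prediction in zip(groups, y_true, y_pred):
        totals[group] += 1
        if truth != prediction:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}
```

A large gap between groups in either result doesn’t prove the system is unfair, but it tells you where to look first and who to talk to.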

Finally, fairness is not about achieving perfect equality in every output, because that’s not always possible. But it is about taking responsibility for the system’s disparities and doing the work to minimize harm and maximize inclusion.

5. User Data Rights: Privacy Isn’t Just Policy. It’s Power.

“Individuals require mechanisms to help curate their unique identity and personal data in conjunction with policies and practices that make them explicitly aware of consequences resulting from the bundling or resale of their personal information.”

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

Let’s end this post with one of the most sensitive and consequential aspects of AI design: how we handle user data.

If your system collects personal information (e.g., location, voice input, behavior patterns), you’re not just managing technical resources. You’re making ethical decisions about autonomy, consent, and power.

Data practices aren’t invisible to users. They shape how people feel about your product. They affect whether someone opts in, opts out, or simply stops trusting the system altogether.

That’s why user data rights need to be part of your design logic from day one, not something handed off to legal after the interface is finished.

Here’s what responsible data stewardship looks like:

Give users meaningful, ongoing control over what they share. Not just a one-time agreement, but the ability to revisit or change decisions over time.

Be specific and transparent about what’s being collected and how it will be used. Avoid vague language or hidden clauses.

Make it easy to opt out or delete data, without friction, without delays.

Build in safeguards: encryption, access limits, and regular audits to ensure no one accesses data they shouldn’t.

Design for clarity, not just compliance. A user shouldn’t need to be a lawyer to understand your privacy practices.
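For teams who want to see how these practices might show up in a system’s logic, here is a minimal Python sketch of consent that is per-purpose, revocable, and tied directly to deletion. The `UserDataStore` class and its purpose names are hypothetical, and the in-memory storage stands in for whatever encrypted, access-controlled store a real product would use.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UserDataStore:
    """Per-user data gated by per-purpose consent (hypothetical, in-memory)."""
    consents: Dict[str, bool] = field(default_factory=dict)      # purpose -> granted?
    records: Dict[str, List[str]] = field(default_factory=dict)  # purpose -> stored items

    def set_consent(self, purpose: str, granted: bool) -> None:
        """Let the user grant or withdraw consent at any time."""
        self.consents[purpose] = granted
        if not granted:
            # Withdrawing consent deletes that purpose's data immediately.
            self.records.pop(purpose, None)

    def collect(self, purpose: str, item: str) -> bool:
        """Store an item only if the user has opted in for this purpose."""
        if not self.consents.get(purpose, False):
            return False  # default is no collection
        self.records.setdefault(purpose, []).append(item)
        return True

    def delete_all(self) -> None:
        """Honor a full deletion request without extra steps."""
        self.records.clear()
```

Two defaults carry the ethics here: nothing is collected unless the user has said yes for that specific purpose, and saying no later removes what was already stored.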

Too many systems treat privacy like a footnote, something to check off for regulation. But users can tell when they’re being respected. They can also tell when they’re not.

Conclusion

In this post, we saw that ethical AI design requires more than technical skill; it calls for a mindset that prioritizes responsibility, transparency, and respect for human values. We explored IBM’s “Design for AI” framework, which offers five key areas (accountability, value alignment, explainability, fairness, and user data rights) as practical lenses to guide our decisions. Whether you’re a developer, researcher, or designer, your role carries ethical weight, and the choices you make shape not just how systems function but how they impact people’s lives.

Reference

IBM. (n.d.). Everyday ethics for artificial intelligence. IBM. Retrieved May 18, 2025, from https://www.ibm.com/design/ai/ethics/
