The first time I used an AI model like ChatGPT to generate product copy, I typed something simple. “Write onboarding text for a finance app.” The result? It worked. It was fine. Generic. Vague. Flat.

The second time, I tried: “Explain the value of saving regularly in a friendly tone.”

Better.

But the third time, I wrote:

“You are a UX writer for a finance app designed for college students. Write onboarding copy that sounds friendly, encouraging, and helps users feel like saving money is actually doable. Use fewer than 25 words.”

That prompt? It sang.

Now, what changed?

Not the AI. Not the technology.

What changed was how I framed the interaction. What changed was the design of the prompt.

And that’s what I want to talk about today:

What happens when we stop thinking of prompts as commands, and start thinking of them as designed conversations.

Designed Conversations? 🧐

In effect, every prompt defines a conversation with assumptions, expectations, and outcomes, and this is precisely why design thinking matters.

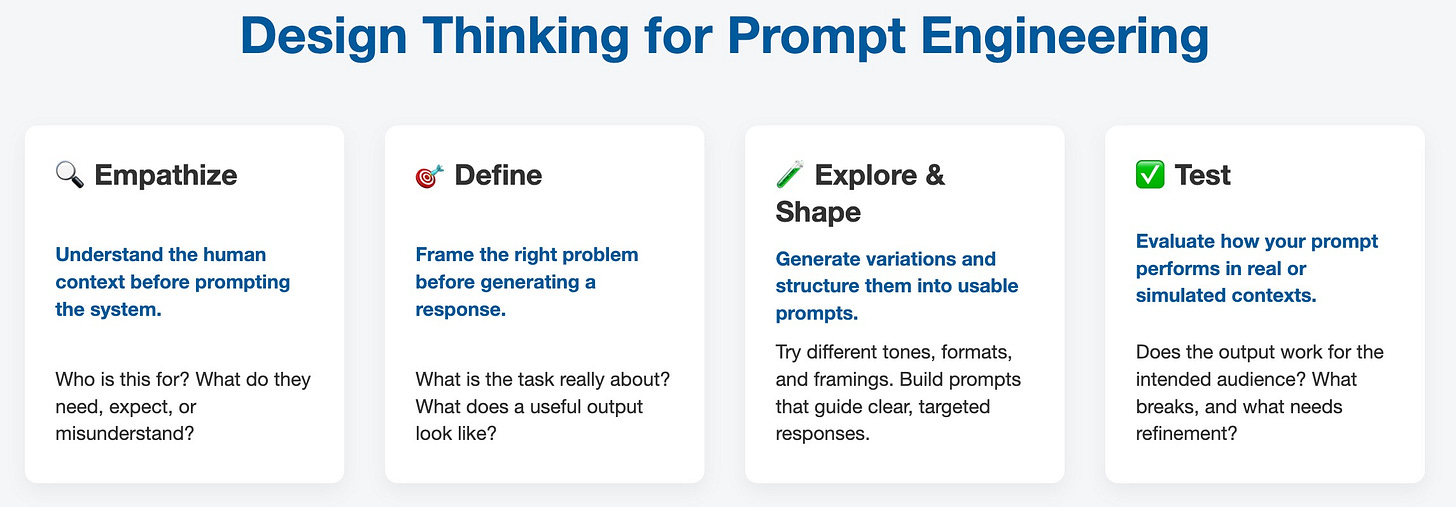

Let me guide you through how each classic Design Thinking principle maps onto prompt design. I’ll keep it straightforward, practical, and grounded in real use.

1. Empathize 👉🏼 Understand the human context before prompting the system

Before you write a prompt, especially one that asks the AI to play a role or generate something for a particular audience, you need clarity on the real human context behind the task.

Sometimes you're giving the AI a role to act in:

“You are a customer support agent...”

“You are a UX writer...”

Other times, you're designing an output for a specific person or group:

“Write this for a first-time user...”

“Summarize this for a policymaker...”

In both cases, it’s not enough to name the role or stakeholder. You need to understand what doing that job well actually means or what that stakeholder truly needs to receive.

Some important questions to ask here are:

Who is this prompt ultimately serving?

What do they value?

What might cause confusion, frustration, or mistrust?

What constraints or context shape how they’ll interpret the output?

Empathy here is not abstract. It's specific, situated, and tied to the real dynamics of the interaction you're trying to support.

👩🏻‍💻 Let’s look at a few examples to see how prompt clarity evolves with a stronger understanding of the user:

Bad prompt:

“Write error message for login failure.”

Better prompt:

“You are a UX writer. Write a login error message that’s clear and polite.”

Even better:

“Write a friendly, low-stress login error message for a user who may be anxious about security and not tech-savvy. Offer one clear next step.”

Each version shows a deeper awareness of who the message is for, how they might feel, and what they need next.

This is what it means to bring empathy into prompt design, not as a surface-level gesture but as the foundation of effective interaction.
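If you reuse prompts like these, it can help to keep the human context in one place instead of retyping it each time. Here is a minimal Python sketch of that idea. The names `AudienceContext` and `build_prompt` are made up for illustration, and the sentence template is just one possible phrasing, not a standard.

```python
from dataclasses import dataclass


@dataclass
class AudienceContext:
    """Hypothetical container for the human context behind a prompt."""
    who: str      # who the output ultimately serves
    feeling: str  # how they are likely to feel in the moment
    needs: str    # what they need to receive from the output


def build_prompt(task: str, ctx: AudienceContext, tone: str) -> str:
    """Combine the task with the human context into one prompt string."""
    return f"Write a {tone} {task} for {ctx.who} who may be {ctx.feeling}. {ctx.needs}"


ctx = AudienceContext(
    who="a user",
    feeling="anxious about security and not tech-savvy",
    needs="Offer one clear next step.",
)
prompt = build_prompt("login error message", ctx, tone="friendly, low-stress")
print(prompt)
```

Printed, this reproduces the "even better" prompt from the example above. The code matters less than the habit it encourages: the fields make you answer who the message is for, how they feel, and what they need before you ask for anything.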

2. Define 👉🏼 Frame the right problem before generating a response

After empathy comes clarity. If empathy is about understanding the human context, then “define” is about shaping that understanding into a clear, purposeful task.

A common mistake in prompt writing is to ask for output before clarifying the objective.

The result? Vague answers, generic suggestions, or misaligned tone. 😩😩😩

When you're using AI in UX work, you're often designing prompts to:

✅ Explain something

✅ Analyze data

✅ Generate options

✅ Translate between technical and human language

✅ Simulate or summarize feedback

✅ Draft interaction flows or content variants

But if the task is too broad or framed from the wrong angle …

The AI fills in the gaps with guesswork.

So, the design move here is:

Be specific about the outcome, the audience, and the constraints.

You can enhance your prompt by asking the following questions:

What’s the real goal here? Not just the task, but the value behind it.

What would a misleading or incomplete answer look like?

👩🏻‍💻 Next, let’s look at some examples:

Bad prompt:

“Rewrite this button label.”

Better prompt:

“Suggest clearer labels for this button.”

Even better:

“Suggest three button label options for a checkout screen. The goal is to reduce cart abandonment and make it clear that the user can review their order before payment.”

The better the problem is defined, the more usable and targeted the AI's response becomes.

Design Thinking here reminds us that clarity at this stage prevents waste later.

In prompt design, that means:

Fewer vague answers

Less time spent rewording

More value from each interaction

So, the best summary of this phase is this:

Define the task like you're briefing a teammate. Because in a sense, you are.
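A brief like that can be written down as a small structure that refuses to produce a prompt until the outcome, the audience, and the constraints are filled in. The sketch below is one way to do it in Python. `PromptBrief`, its field names, and the "Give exactly three options." constraint wording are assumptions chosen for this example.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class PromptBrief:
    """Hypothetical brief: what to produce, for what context, why, and within which limits."""
    deliverable: str = ""
    context: str = ""
    goal: str = ""
    constraints: List[str] = field(default_factory=list)

    def missing(self) -> List[str]:
        """Return the names of fields that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def render(self) -> str:
        """Build the prompt, or fail loudly if the brief is underspecified."""
        gaps = self.missing()
        if gaps:
            raise ValueError("Brief is underspecified, missing: " + ", ".join(gaps))
        rules = " ".join(self.constraints)
        return f"{self.deliverable} for {self.context}. The goal is to {self.goal}. {rules}"


# The "bad prompt" from above, expressed as a brief: most fields are empty.
vague = PromptBrief(deliverable="Rewrite this button label")
print(vague.missing())

# The "even better" prompt, with every field filled in.
full = PromptBrief(
    deliverable="Suggest button label options",
    context="a checkout screen",
    goal=(
        "reduce cart abandonment and make it clear that the user "
        "can review their order before payment"
    ),
    constraints=["Give exactly three options."],
)
print(full.render())
```

The list of missing fields is the useful part. It shows what the AI would otherwise have to guess.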

3. Explore & Shape 👉🏼 Generate variations and structure them into usable prompts

UX teams rarely go with the first idea.

We sketch multiple layouts. We test multiple copy variants. We storyboard alternate flows. Prompt design should follow the same principle:

Don’t settle on a single version. Explore the space.

The goal here is to treat prompts like prototypes: quick, low-cost, and easy to test.

Try different structures. Different tones. Different constraints. Different assumptions about the user's goal or context.

This not only increases your chances of getting a useful output, but it also helps you better understand how the model responds to different kinds of input.

Some important questions to ask here are:

What’s another way I could phrase this prompt?

What tone would change the response?

What would a more visual, structured, or story-driven version of this prompt look like?

What assumptions is my prompt currently making, and can I vary them?

👩🏻‍💻 Next, let’s have a look at some examples for the following scenario:

You're writing microcopy for a delayed delivery message.

These are some of the options you have:

Prompt A:

“Write an apology message for a late order.”

Prompt B:

“You are a customer support rep. Write a polite apology message for a 30-minute delay.”

Prompt C:

“Write three tone variants for a delivery delay message: apologetic, humorous, and reassuring.”

Prompt D:

“Write a delivery delay message optimized for user trust. Include a realistic time window and an action they can take if they need help.”

Here, you're not testing the outputs just yet. You’re testing the framing of the prompt.

Each version represents a different design choice: tone, structure, level of guidance.

This kind of exploration is especially valuable in larger projects or more complex prompt workflows, where subtle changes in wording can lead to very different AI behavior.

Design Thinking teaches us not to fall in love with our first idea.

Prompt engineering rewards the same mindset. 💔💔💔

In fact, building a small prompt set (3 to 5 carefully varied prompts) is often more effective than endlessly tweaking one. So, the bottom line here is:

Think of ideation here as linguistic prototyping.

You’re designing interactions with words, not wireframes.
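A small prompt set does not have to be typed by hand. The Python sketch below varies two design choices from the delivery delay scenario, tone and level of guidance, and builds one prompt per combination. The function name `make_variants` and the "Tone: ..." phrasing are assumptions for this example, so swap in whatever dimensions you actually want to explore.

```python
from itertools import product

BASE_TASK = "Write a delivery delay message"
TONES = ["apologetic", "reassuring"]
GUIDANCE = [
    "",  # no extra guidance
    "Include a realistic time window and an action they can take if they need help.",
]


def make_variants(task, tones, guidance):
    """Return one labeled prompt for every tone/guidance combination."""
    variants = []
    for tone, extra in product(tones, guidance):
        prompt = f"{task}. Tone: {tone}."
        if extra:
            prompt += f" {extra}"
        variants.append({"tone": tone, "guided": bool(extra), "prompt": prompt})
    return variants


prompt_set = make_variants(BASE_TASK, TONES, GUIDANCE)
for variant in prompt_set:
    print(variant["tone"], variant["guided"], "->", variant["prompt"])
```

Two tones and two guidance levels give four prompts, which sits inside the 3 to 5 range. Labeling each variant with the choices behind it also makes it easier to say later which framing made the difference.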

4. Test 👉🏼 Evaluate how your prompt performs in real or simulated contexts

Once you’ve shaped a prompt that looks good on paper (or in ChatGPT), it’s time to ask: does this actually work for the right person, at the right time, in the right context?

❌ Just because a prompt produces a fluent answer doesn’t mean it’s a good one. ❌

❌ Just because it seems “clear” to you doesn’t mean it will be effective in practice. ❌

Testing is where you treat your prompt like a real design artifact, one that can suffer from usability problems, ambiguity, tone mismatch, and other failure modes.

Some of the important questions to ask here are:

Does the output support a real task or decision?

Would an end-user find this understandable and usable?

What didn’t the AI consider that it should have?

What happens if you change the tone or remove the context?

You can also test variations side-by-side to compare:

Which version is more concise?

Which one sounds more on-brand?

Which version would a user trust more?

👩🏻‍💻 Next, let’s have a look at some examples for the following scenario:

You’re designing copy for a subscription cancellation screen.

Prompt A:

“Write cancellation confirmation text.”

Prompt B:

“Write a confirmation message for users canceling a subscription.

Tone: respectful, no guilt, offer option to reactivate.

Limit: 30 words.”

You test the outputs with users. You ask:

Do they feel respected or pressured?

Is the reactivation offer clear?

Do they understand what happens next?

In doing so, you're testing the prompt’s ability to guide useful output.
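Some of Prompt B's constraints can be checked mechanically before any copy reaches users. The Python sketch below is a rough pre-check, not a replacement for user testing. The two sample outputs are invented for illustration (they are not real model responses), and the guilt-phrase list and the "reactivate" keyword test are assumptions you would tune for your own product.

```python
WORD_LIMIT = 30  # from Prompt B: "Limit: 30 words."

# Illustrative phrases that tend to read as pressure; adjust for your brand.
GUILT_PHRASES = ["sorry to see you go", "are you sure", "you'll lose"]


def check_output(text):
    """Check one candidate message against the constraints stated in the prompt."""
    lowered = text.lower()
    return {
        "within_limit": len(text.split()) <= WORD_LIMIT,
        "no_guilt": not any(phrase in lowered for phrase in GUILT_PHRASES),
        "offers_reactivation": "reactivate" in lowered,
    }


# Made-up candidate outputs, standing in for what a model might return.
sample_ok = (
    "Your subscription is canceled. "
    "You can reactivate anytime from your account settings."
)
sample_bad = "We're sorry to see you go. Are you sure? You'll lose all your benefits."

for name, text in [("sample_ok", sample_ok), ("sample_bad", sample_bad)]:
    print(name, check_output(text))
```

A check like this only tells you whether the output followed the instructions. Whether people feel respected or pressured is still a question for real users.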

You can also test prompt resilience: 💪🏻💪🏻💪🏻

Does it hold up across different types of data?

What happens when the context is messy or incomplete?

Can someone else reuse your prompt and get a consistent result?
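One narrow piece of resilience can be checked without calling a model at all: what happens to the prompt itself when a teammate reuses it with messy or incomplete context. The Python sketch below turns Prompt B into a template and reports which values are missing instead of silently sending a prompt with blanks. The `fill` helper and the three test cases are hypothetical, and this says nothing about how the model behaves once it receives the prompt.

```python
from string import Formatter

TEMPLATE = (
    "Write a confirmation message for users canceling a subscription. "
    "Tone: {tone}. Limit: {limit} words."
)


def fill(template, context):
    """Return (prompt, missing). The prompt is None if any placeholder lacks a value."""
    needed = [name for _, name, _, _ in Formatter().parse(template) if name]
    cleaned = {key: str(value).strip() for key, value in context.items()}
    missing = [name for name in needed if not cleaned.get(name)]
    if missing:
        return None, missing
    return template.format(**cleaned), []


cases = {
    "clean": {"tone": "respectful, no guilt, offer option to reactivate", "limit": 30},
    "messy": {"tone": "  respectful, no guilt  ", "limit": " 30 "},
    "incomplete": {"tone": ""},
}

results = {name: fill(TEMPLATE, context) for name, context in cases.items()}
for name, (prompt, missing) in results.items():
    print(name, "->", prompt if prompt else f"missing: {missing}")
```

The messy case gets cleaned up and still produces a usable prompt, while the incomplete case is stopped with a list of what is missing. That is a small step toward letting someone else reuse your prompt and get a consistent result.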

Wrap-Up Thought for Test

Design Thinking treats testing not as a verdict, but as a conversation.

Prompt testing works the same way. You’re learning how the AI behaves, where it makes assumptions, and how it supports or hinders human goals.

Final Remarks and Conclusion

Combining Design Thinking with prompt engineering offers a practical way to improve how we work with AI. It supports clearer outcomes, reduces unnecessary iteration, and encourages prompts that reflect real user needs.

As models grow more capable and more expensive per token, this mindset helps us stay focused and intentional. It is not about adding complexity, but about designing prompts with the same care we apply to any human-centered system.

If this topic speaks to you, we invite you to join us for our live AI Masterclass on Wednesday, June 11, 2025. We’ll cover real-world examples, share practical strategies, and answer your questions live.